Python’s documentation is comprehensive to put it lightly, and logging is no exception. When looking at how to effectively manage logging across a project composed of multiple modules it’s not a trivial task to review the documentation and come up with a concise solution, and it’s no better when searching Stack Overflow for practical examples either. Add on to that the modern advice of avoiding using the root logger where possible and a lot of unofficial by-example documentation available is stale at best, broken at worst.

This isn’t intended to be an equally-comprehensive article about the ins and outs of Python Logging, but instead be a quick rundown of how to move from individual logging-per-module to something more cohesive. Who knows, maybe I’ll convince someone that logging in general isn’t as onerous as it might seem?

The Problem

You have an application composed of a main.py core application that imports one or more project-managed modules to handle specific tasks, and want to have your logging configuration consistent across all modules.

But some modules may matter more than others, some may have different levels of logging required, or it could be that modifying a module to update logging settings is difficult after it’s locked or frozen for distribution.

There’s a few ways to address this, to varying degrees:

- Use the root logger in your main python file and all modules, customizing as needed. This ticks some boxes, but doesn’t give fine-grained control over the logs of each module.

python -c "import logging; logging.error('Error!')" - Create a custom logger in your main python file and all modules, customized for the log level and output type you need per-module. This does give fine-grained control over the logs of each module, but can be bulky – either boilerplate at the top of each file, multiple configuration files, or even multiple log files which may not be ideal.

python -c "import logging; logger = logging.getLogger(__name__); logging.basicConfig(level=logging.DEBUG); logger.error('Error!')" - Create a base logger with a hierarchy for modules, allowing for selective overrides. This is the option this article looks at.

The Solution

As shared in the list above there are options that partially (and potentially fully) meet the functional requirement of logging across modules, but only one of them keeps boilerplate to a minimum and allows core logging changes to cascade down to modules with minimal effort – option #3.

With that in mind, let’s first create a base logger configuration file with log levels and formatters to match our requirements – in this case we include both a console and file handler so that logs are written to the terminal and to a separate app.log file in the application directory. The logger will be named baseLogger, but you can choose any name you’d like as long as it’s consistent in the logging.conf file, your main.py file and all modules:

# logging.conf

[loggers]

keys = root,baseLogger

[handlers]

keys = consoleHandler, fileHandler

[formatters]

keys = baseFormatter

[formatter_baseFormatter]

format = %(asctime)s - %(name)s - %(levelname)s - %(message)s

[logger_root]

level=DEBUG

handlers=consoleHandler

[logger_baseLogger]

level = DEBUG

handlers = consoleHandler, fileHandler

qualname = baseLogger

propagate = 0

[handler_fileHandler]

class=FileHandler

formatter=baseFormatter

level=DEBUG

args=('app.log',)

[handler_consoleHandler]

class = StreamHandler

level = DEBUG

formatter = baseFormatter

args = (sys.stdout,)

This file can be directly loaded by the built-in python logging module, through the config.fileConfig function: logging.config.fileConfig(fname="logging.conf")

It’s not entirely intuitive, but you need to include the root logger and set a handler (in our case the console handler) for the config to be accepted. I won’t go into detail about the contents and format of this file as the PSF has done a far better job than I ever could, but I’ll call out that the important parts for the purpose of this article are the non-root logger name (baseLogger) and that in this instance we have two handlers:

- fileHandler: Writes log entries to the app.log file.

- consoleHandler: Writes log output directly to the console

- baseFormatter: Using %(name)s here will show us the name of the logger in the log entry

For the main application we’re loading log configuration from file (logging.conf) and having the sole function be to output messages through the logging system. Note that we’re importing module1 in this file, and code for this module is provided later.

# main.py

import logging

import logging.config

import module1

# load logging settings from file

logging.config.fileConfig(fname="logging.conf")

# get instance of baseLogger logger

logger = logging.getLogger("baseLogger")

def main():

logger.info("Starting work. Level set to DEBUG from config file")

# log level inherited from baseLogger, DEBUG

# expect to see log entries at DEBUG and WARNING level

module1.do_work("Greg")

# change logLevel for module1 to WARN

logger.info("Setting module1 level to WARN")

logger.getChild("module1").setLevel(logging.WARN)

# log level set directly on module1, WARN

# expect to see log entries at WARNING level only

module1.do_work("Harry")

logger.info("baseLogger INFO messages are still logged")

logger.debug("baseLogger DEBUG messages are still logged")

if __name__ == "__main__":

main()

This application when run will:

- Import logging configuration from file, and select the baseLogger logger from that configuration

- Generate start and end logs for the application showing that INFO and DEBUG logs are generated

- Call do_work() once, without changing any logger configuration

- Change the baseLogger.module1 log level from DEBUG to WARN

- Call do_work() a second time to confirm that previously-seen INFO logs are no longer seen after raising the level to WARN

In module1 the key requirement is that we use dot notation to create a logging hierarchy, in this example the base logger is named baseLogger and we’ll use the imported module’s __name__ as a suffix to produce its own unique name: baseLogger.module1. Note that we don’t need to load the logging configuration file here, the configuration is inherited from the baseLogger logger configured in main.py.

This module has a single function do_work(person) which will call the logger twice, once with a DEBUG level item and a second time with a WARN level item:

# module1.py

import logging

# logger name will be 'baseLogger.module1'

logger = logging.getLogger(f"baseLogger.{__name__}")

def do_work(person):

logger.debug(f"{person} has been very good today")

logger.warning(f"{person} has actually been very bad")

This module serves a single purpose – to generate DEBUG and WARN logs when do_work() is called.

There is unfortunately no programmatic way that I know of to get the name of the parent logger (baseLogger) so we’re specifying it by name in this example and joining it with the modules __name__ using an f-string.

That should be all that’s needed for this example – you can now run main.py and look for either the console output or output in the app.log file.

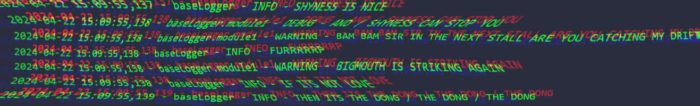

You will see something similar to the following:

2024-04-22 15:09:55,137 - baseLogger - INFO - Starting work. Level set to DEBUG

2024-04-22 15:09:55,138 - baseLogger.module1 - DEBUG - Greg has been very good today

2024-04-22 15:09:55,138 - baseLogger.module1 - WARNING - Greg has actually been very bad

2024-04-22 15:09:55,138 - baseLogger - INFO - Setting module1 level to WARN

2024-04-22 15:09:55,138 - baseLogger.module1 - WARNING - Harry has actually been very bad

2024-04-22 15:09:55,138 - baseLogger - INFO - baseLogger INFO messages are still logged

2024-04-22 15:09:55,139 - baseLogger - DEBUG - baseLogger DEBUG messages are still loggedFrom the output you can see that:

- INFO level logs are initially in scope for the baseLogger logger.

- DEBUG level logs are initially in scope for the baseLogger.module1 child logger during the first do_work() call.

- After setting the baseLogger.module1 log level to WARN, DEBUG level logs from module1 are no longer emitted.

- INFO and DEBUG level logs are still in scope for the baseLogger logger.

From this we can see that a single logging configuration can support logging from multiple locations, and that post-load the log level can be changed for modules without impacting the log level at the base. This would also apply to module log level changes not impacting other modules logging, though not shown in this example.

An obvious benefit to this solution over others is that it’s relatively portable between projects – you can copy the logging.conf file and unless you have a requirement for a unique logger name you can freely load and use this as-is. Once you have your logging requirements locked in it’s not necessary to change configuration files, and if you do they should be near-drop-in replacements across your projects provided the base logger name hasn’t changed.

Hopefully this helps someone, anyone? Bueller?